🤖 What Is an AI Agent?

AI agents, especially those powered by Large Language Models (LLMs), have gained significant attention in recent years. While there is no universally agreed-upon definition, an AI agent can generally be described as:

“A system that uses an LLM to reason through a problem, generate a plan, and execute that plan using a set of tools.”

Unlike traditional chat-based applications that passively respond to prompts, LLM-based agents are capable of autonomous decision-making. These agents are designed with a blend of reasoning, memory, and action execution, enabling them to handle complex tasks with minimal human intervention.
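To make the reason, plan, and execute cycle concrete, here is a minimal sketch in Python. It is illustrative only: `fake_llm_plan`, `run_agent`, the `TOOLS` registry, and the `"$prev"` placeholder are all hypothetical names invented for this example, and the "LLM" is a hard-coded stand-in rather than a real model call.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools: plain functions the agent is allowed to call.
def add(a: float, b: float) -> float:
    return a + b

def multiply(a: float, b: float) -> float:
    return a * b

TOOLS: Dict[str, Callable[..., float]] = {"add": add, "multiply": multiply}

def fake_llm_plan(goal: str) -> List[dict]:
    """Stand-in for an LLM call: returns a fixed plan for one known goal.

    A real agent would send the goal and the tool descriptions to a model
    and parse its reply into steps shaped like these.
    """
    if goal == "compute (2 + 3) * 4":
        return [
            {"tool": "add", "args": [2, 3]},
            # "$prev" is an assumed convention meaning "result of the last step".
            {"tool": "multiply", "args": ["$prev", 4]},
        ]
    return []  # unknown goal: no plan

def run_agent(goal: str) -> Tuple[Optional[float], List[str]]:
    memory: List[str] = []           # short-term memory: a log of executed steps
    result: Optional[float] = None
    for step in fake_llm_plan(goal):                 # plan
        args = [result if a == "$prev" else a for a in step["args"]]
        result = TOOLS[step["tool"]](*args)          # act by calling a tool
        memory.append(f"{step['tool']}({args}) -> {result}")
    return result, memory

if __name__ == "__main__":
    answer, log = run_agent("compute (2 + 3) * 4")
    print(answer)
    for line in log:
        print(line)
```

The loop never asks the user what to do next: once it has a goal, the planner decides which tools to call and in what order. That is the difference from a chat application that only returns text.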

The agent paradigm first gained traction through open-source projects like AutoGPT and BabyAGI, which demonstrated how LLMs could autonomously manage multi-step tasks. These systems could interpret a user's objective, break it into actionable goals, and execute those goals by calling tools and services, often without continuous human oversight.

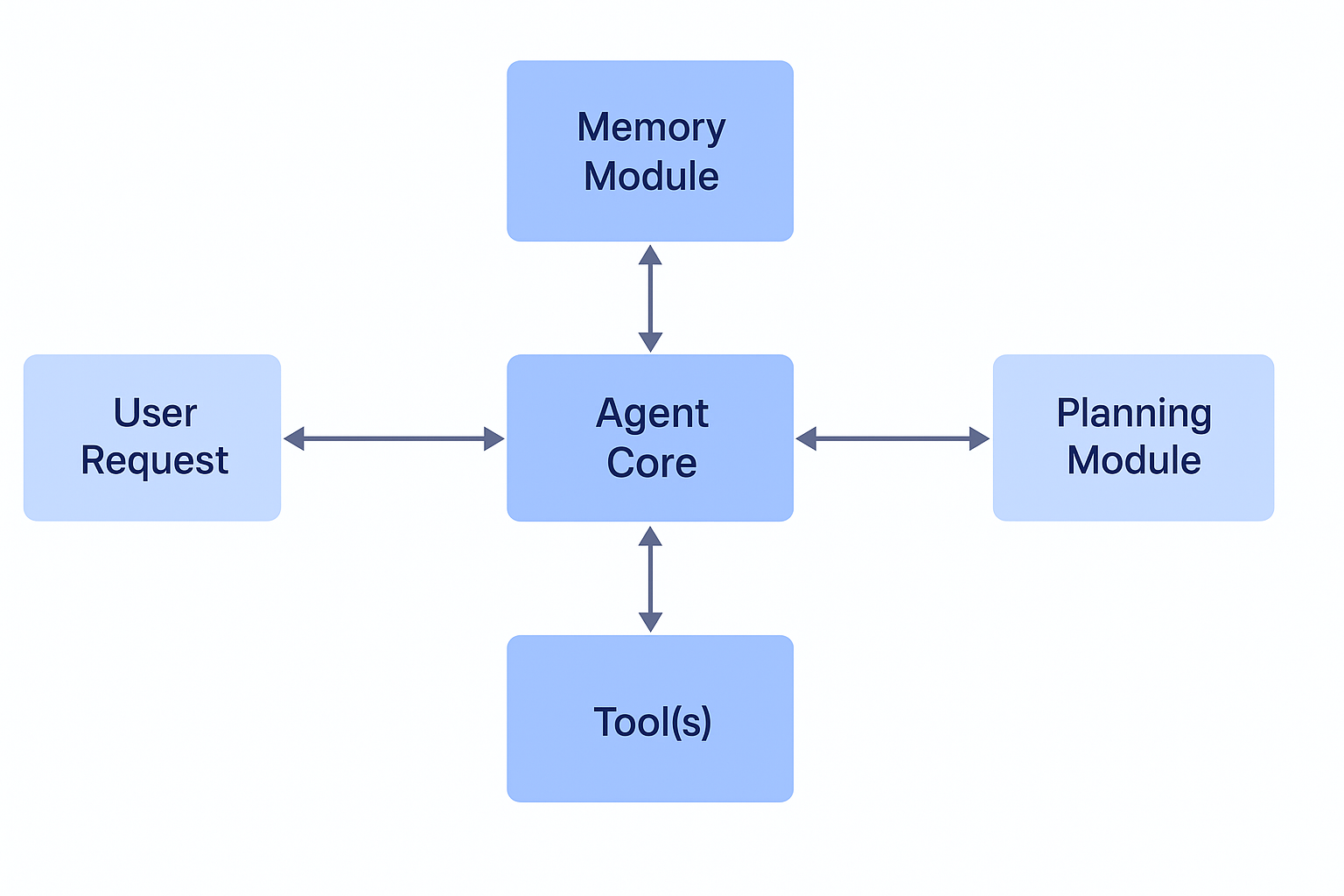

🧠 Key Components of the Agent Architecture

✅ A modular design, in which the model, tools, and memory are separate components, keeps the agent flexible, reusable, and scalable. Developers can plug in different tools, models, or memory backends depending on the application’s needs.
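One way to picture this plug-in idea is with small interfaces that each component must satisfy. The sketch below is an assumption about how such a design could look, not the architecture of any particular framework: `Model`, `Memory`, `EchoModel`, `ListMemory`, and `Agent` are hypothetical names, and `EchoModel` is a toy that always picks the same tool.

```python
from typing import Callable, Dict, List, Protocol

class Model(Protocol):
    """Anything that can turn a prompt into text (an LLM in a real system)."""
    def complete(self, prompt: str) -> str: ...

class Memory(Protocol):
    """Anything that can store and return past interactions."""
    def add(self, item: str) -> None: ...
    def recall(self) -> List[str]: ...

class EchoModel:
    """Toy model, not a real LLM: it always selects the 'upper' tool."""
    def complete(self, prompt: str) -> str:
        return "upper"

class ListMemory:
    """In-process memory backend; a database could replace it."""
    def __init__(self) -> None:
        self._items: List[str] = []

    def add(self, item: str) -> None:
        self._items.append(item)

    def recall(self) -> List[str]:
        return list(self._items)

class Agent:
    def __init__(
        self,
        model: Model,
        memory: Memory,
        tools: Dict[str, Callable[[str], str]],
    ) -> None:
        self.model = model
        self.memory = memory
        self.tools = tools

    def handle(self, request: str) -> str:
        tool_name = self.model.complete(request)   # model chooses a tool
        output = self.tools[tool_name](request)    # tool does the work
        self.memory.add(f"{request} -> {output}")  # memory records the step
        return output

# Swapping a component means passing a different object here.
agent = Agent(EchoModel(), ListMemory(), {"upper": str.upper})
```

Because `Agent` depends only on the `Model` and `Memory` interfaces, replacing `ListMemory` with a persistent store or `EchoModel` with a real LLM client would not require changes to `Agent` itself.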

📖 For a complete breakdown and real-world use cases of LLM-powered AI agents, check out the full article on the official NVIDIA blog.